More than six months have now passed since the launch of ChatGPT. The widespread accessibility of so-called generative Artificial Intelligence (AI), through tools such as ChatGPT, Bard, Claude, Dall-E, or Midjourney, makes it one of the hottest digital topics of 2023. From geeks to school teachers to grandparents, everyone is talking about it. The opportunities AI brings are covered extensively in the press, as are the fears it raises. While AI has long been mastering everyday tasks, making scientific breakthroughs and developing creative solutions to the challenges of our time, it is its pace of development and its ability to learn autonomously that impress today. What does our (near) future look like with digital intelligence? Will AI one day also be able to think and act ethically? And what consequences would this have for us humans? Should we be optimistic or fearful? These are the questions we asked the attendees of our event “Künstlich und Intelligent?”, held in collaboration with SRF in June 2023.

One thing is clear: the public’s greatest fear about AI is that it can be misused. Many people realise the massive potential this technology represents, and how easily it can be used for the wrong purposes, such as cyberattacks, data manipulation, social media manipulation, or the hacking of information systems, hospitals or cars, to name just a few. Many have also raised fears about the distortion of reality, misinformation and the proliferation of fake news, all of which can have major implications for our democratic society. The threat of hidden manipulation by AI and the lack of transparency over systems and algorithms are growing concerns that will undoubtedly have to be addressed quickly. In addition, as with other technologies, people have questioned who bears responsibility and accountability in the development of AI.

Many attendees therefore highlighted the adoption of norms and regulations (as is currently under way at the European level), including a framework that favours the development of these technologies while limiting the risk of abuse, as a way to reduce fears in the population.

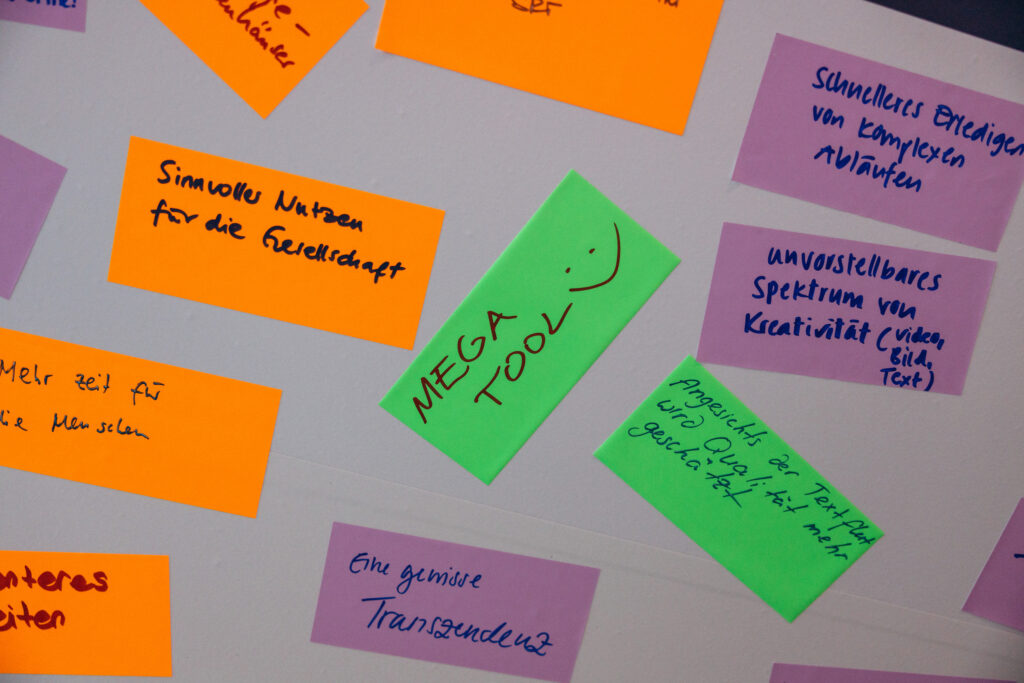

Most people recognise the positive potential of AI. While they don’t expect to understand the technology in detail, they definitely hope to be able to use it to make their daily lives easier. The hope of increased productivity (i.e. fewer repetitive and less demanding intellectual tasks) was by far the positive aspect most often shared by the attendees. Indeed, AI can be an enormous support tool, in particular at work, for simplifying complex procedures, and it can surely increase creativity (e.g. in video, image and text). Other people also noted how AI can open up new possibilities, such as further advances in science and research, or support in tackling global threats (e.g. sustainable responses to climate change via intelligent irrigation systems).

Humans have always adapted to new technologies. Nevertheless, the speed of technological change today is unprecedented. Trying to keep up with this pace can lead to feelings of being overwhelmed. It can even lead to fear – fear that technology could escape society’s control – as mentioned several times by the attendees. That is why we asked them what it would take to overcome their fears:

The most pressing need expressed was the need for transparency: most of the participants explained that transparent information, awareness and communication are essential to create understanding about how AI algorithms and systems are built and how they work. This is key to building trust. Another important need, raised several times, was education: educating the population on how to make use of AI through learning, sharing experiences and exploring, and in particular promoting critical thinking skills. Others highlighted the need for governance and regulation to ensure the responsible design and use of AI by means of international agreements and the implementation of ethical filters and rules. Some attendees raised the need for verification mechanisms to prevent the spread of misinformation. This could be achieved, for example, through fact-checkers or cross-referencing with other reputable sources.

Overall, as with most new technological breakthroughs, there is always a period of adaptation before society accepts them, integrates them and benefits from their full potential. Many questions will remain open for now and will inevitably have to be addressed in the near future. From a societal perspective, one of the main challenges will be to ensure that everyone has access to these new technologies as well as to learning tools. Finally, two very specific characteristics of new, AI-driven digital technologies are the speed of their deployment (ChatGPT has been used by more than a million people in about two months) and their transversality (i.e. the fact that they impact almost all aspects of our private and professional lives). One of the big associated challenges is to find adequate legal and societal adoption mechanisms that can cope efficiently with the speed and breadth of the ongoing changes.

